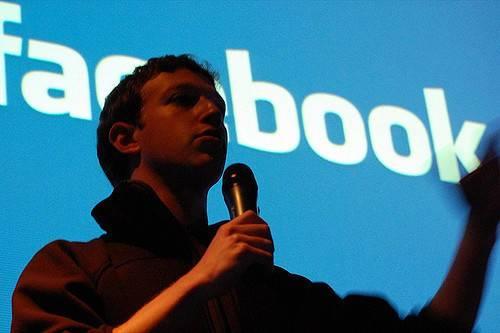

Last year, at the height of the presidential campaigns, Facebook CEO Mark Zuckerberg found himself in an unwinnable dilemma. Donald Trump, Facebook employees said, was promoting hate speech: his campaign speeches, posted on the site, advocated a ban on Muslims and selective treatment based on religion. The posts should be taken down, Zuckerberg was told.

But the Facebook CEO eventually decided to let the posts stand. Taking down a presidential candidate's posts would not only have cut into valuable revenue (at the time, the social media company was looking at a minimum of $300 million in revenue from such promotions), but could also have been misinterpreted as a partisan move to squelch a candidate's platform.

"When we review reports of content that may violate our policies, we take context into consideration," a Facebook representative told Business Insider. The context in this case, the company argued, was "political discourse," and the right of people to express opinions freely without having their posts taken down.

That decision is now coming back to haunt Facebook. Although taking down the posts might have exposed Facebook to accusations of partisan behavior, keeping them up fed another problem, one that ProPublica writer Julia Angwin says is ongoing: the policy itself, ProPublica claims, is biased.

Based on fairly detailed research, the nonprofit publication found that Facebook's rules protect white men from hate speech more readily than they protect African American children.

And it isn't just the controversial words of the President that are raising questions about the unequal lens through which hate speech incidents are viewed.

In June, following the shocking news of a terrorist attack in London, Rep. Clay Higgins offered his provocative "answer" to "radicalized" Muslims: "Kill them all. For the sake of all that is good and righteous. Kill them all." He didn't explain how anyone acting on his call should ensure that innocent Muslim citizens weren't caught in the fray, and his post wasn't taken down.

Compare that, said ProPublica, with what happened when Black Lives Matter activist Didi Delgado posted "All white people are racist. Start from this reference point, or you've already failed." The post was taken down and Delgado was temporarily blocked from posting on Facebook. Delgado objected and launched a petition on Change.org.

Angwin pointed out that the issue wasn't whether the posts were offensive, but the apparent double standard between how Facebook treated Higgins' post and Delgado's.

But according to documents ProPublica obtained from Facebook, there was a reason for that double standard, and it had to do with the subset of individuals targeted by the hate speech.

"Higgins’ incitement to violence passed muster because it targeted a specific sub-group of Muslims: those that are 'radicalized,'" said Angwin. Delgado's, by contrast, was a sweeping statement about an entire race of people that, in Facebook's view, could incite violence against that group as a whole.

The same reasoning applied to a cover page posted by San Francisco resident Phyllis Meehan. Under the social media site's rules, posting the title "All Men are Trash" can get your page taken down and your access blocked for 24 hours. Again, the statement targets a broad section of the population, not a subset of men. And as Gizmodo points out, Facebook was fairly consistent in enforcing this policy. Each of Meehan's friends who reposted the statement in protest had their posts taken down, including a male Facebook user who objected to the guidelines.

For Facebook, monitoring hate speech isn't a simple process, and it is complicated by the fact that the company must constantly weigh varying laws around the world that affect how it enforces its standards. Both Facebook and Twitter have run afoul of European laws, which show far less tolerance for hate speech than those in the US.

Still, Facebook argues that its onerous list of rules reflects an effort to balance policing hate speech with protecting free expression. The policy limits hate speech toward protected groups of individuals (largely those defined by sex, religious affiliation, race, national origin, gender identity, sexual orientation, ethnicity or serious disability or disease), but otherwise errs on the side of protecting speech. So criticizing a religion may offend readers but won't necessarily get you blocked. Criticizing the people who practice that religion, who could become targets of violence, however, may.

ProPublica also found that the reasons for take-downs aren't always clear or predictable. "Users whose posts are removed are not usually told what rule they have broken, and they cannot generally appeal Facebook’s decision," notes Angwin. Some users, like Leslie Mac, have found that publicity does tend to influence Facebook's decision-making. After her post, which began "White folks, ...", was taken down, she became the subject of a TechCrunch article criticizing Facebook's actions. The social media giant apologized and reinstated her post.

And she isn't the only one who has prompted a change of heart by going public. Letters of criticism from Norway, Morocco and elsewhere calling out Zuckerberg specifically have turned the tide and helped prompt changes to Facebook's hate speech criteria. Those criticisms have also forced the company to clarify its position on offensive material.

While Facebook protected Trump's right as a presidential candidate to post controversial statements about Muslims, like "calling for a total and complete shutdown of Muslims entering the United States ...", his administration has since been forced to rethink the practicality of such statements. Earlier this year, a federal judge turned attention to Trump's social media comments, pointing out that those statements served as evidence of Trump's intention to discriminate against all Muslim immigrants.

Still, some would argue that Facebook should have taken down this statement, since it targeted a protected religious group, and that Facebook, in effect, violated its own rules. And that has left some readers wondering whether it is really protected categories that Facebook is shielding, or the revenue it earns from powerful voices.

Flickr image: Andrew Feinberg

Jan Lee is a former news editor and award-winning editorial writer whose non-fiction and fiction have been published in the U.S., Canada, Mexico, the U.K. and Australia. Her articles and posts can be found on TriplePundit, JustMeans, and her blog, The Multicultural Jew, as well as other publications. She currently splits her residence between the city of Vancouver, British Columbia and the rural farmlands of Idaho.